How Does Claude 100K Work? [2024]

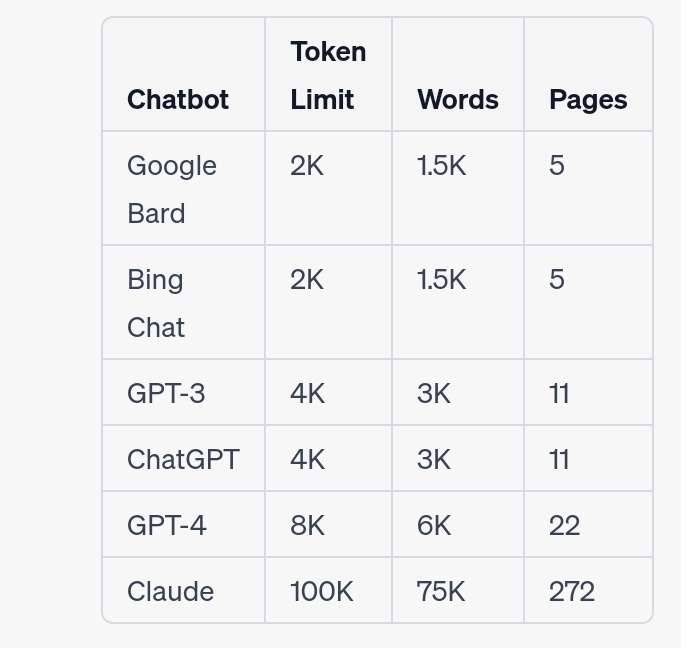

What does the “100K” in Claude 100K mean? It refers to the model’s context window of roughly 100,000 tokens (about 75,000 words), not to a number of books; Anthropic has not published the exact makeup of its training corpus. In this post, we’ll explore the inner workings of Claude 100K through its large-scale self-supervised training process, neural model architecture and specially designed safeguards that allow for beneficial general-purpose discussion.

Training Process

Through self-supervised learning, Claude 100K learned linguistic patterns by predicting the next word across large volumes of text, with no manual labeling required. This exposed it to real examples of how humans communicate, ask questions, show empathy and try to be helpful.

Some key aspects of Claude’s training process include:

- Context-based learning: By predicting the next word from the context before it, Claude learns relationships such as continuing “The man walked his” with “dog”.

- Knowledge encoding: All the patterns and knowledge Claude learns are encoded in its parameters, essentially numerical representations of its insights.

- Broad base of knowledge: The wide range of fiction, non-fiction and conversational data lets Claude converse on a very large number of topics.
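The word-prediction idea above can be sketched with a toy bigram counter. This is only an illustration under stated assumptions: the four-sentence corpus and the function names `train_bigram_counts` and `predict_next` are hypothetical, and a real language model learns neural network weights rather than a lookup table of counts.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real training data is vastly larger.
CORPUS = [
    "the man walked his dog on the beach",
    "she walked her dog in the park",
    "the boy fed his dog at home",
    "the man parked his car on the street",
]

def train_bigram_counts(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev_word):
    """Return the most frequent follower of prev_word, or None if unseen."""
    followers = counts.get(prev_word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

counts = train_bigram_counts(CORPUS)
print(predict_next(counts, "his"))  # "dog" follows "his" twice, "car" once
```

No labels were written by hand here: the text itself supplies both the context and the answer, which is what makes the approach self-supervised.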

Model Architecture

Claude 100K employs a Transformer-based neural network architecture utilizing attention mechanisms. Some aspects include:

- Attention for context understanding: Attention lets the model focus on the relevant parts of lengthy passages, capturing long-range language dependencies better than older architectures such as recurrent networks.

- Decoder-only design: The decoder stack structure enables Claude to generate text token-by-token while attending to contextual meaning.

- Parameter count: Anthropic has not published the number of parameters in Claude. Large language models of this kind typically have billions of parameters, which gives them the capacity to remember facts, reason with common sense and generate coherent long-form dialog.
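As a rough illustration of the attention and decoder-only ideas above, here is a minimal sketch of scaled dot-product attention with a causal mask, written in plain Python. It assumes tiny made-up 2-dimensional token vectors and a hypothetical function name `causal_attention`; it is not Claude's actual implementation, which has not been published, and it omits learned projections, multiple heads and everything else a real Transformer layer contains.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the query against every key up to and including position i.
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up token vectors, used as queries, keys and values alike.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(tokens, tokens, tokens)
for i, row in enumerate(weights):
    print(i, [round(w, 3) for w in row])
```

Each row of weights is one position's view of the text so far. The first token can attend only to itself, while later tokens spread their attention over everything before them, which is what allows token-by-token generation.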

Safety Features

| Feature | Description |
| --- | --- |
| Preference Learning | Through reward signals, Claude learned that being kind, truthful and non-hurtful is the preferred way to respond. |
| Content Filter | Mean, frightening or illegal replies are removed so that Claude stays suitable. |
| Feedback System | People can report problems to the developers, who use the reports to improve how Claude responds. |
| Admitting Uncertainty | If Claude doesn’t know, it says so instead of guessing, which helps it stay accurate. |
| No Private Info | Claude doesn’t save names or personal details from conversations, protecting privacy. |
| Block Function | Users can pause the conversation at any time, keeping them in control. |

Anthropic developed several techniques to ensure Claude remains safe and beneficial in conversation:

- Preference learning – Through rewards, Claude learns to align with human values like being helpful, harmless and honest.

- Content filtering – Potentially unsafe responses are removed to avoid encouraging harm.

- Continuous improvement – A feedback system allows flagging issues to strengthen capabilities and safety over time.

- Transparency – Claude will admit lack of knowledge to avoid providing incorrect information unintentionally.
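To make the preference learning item more concrete, the sketch below shows a pairwise (Bradley-Terry style) loss that is commonly used when training reward models from human comparisons. This is an assumption for illustration: the article does not say which objective Anthropic uses, and the function name `preference_loss` and the example reward values are hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # Equivalent to log(1 + exp(-margin)), split by sign to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical scores a reward model might assign to two candidate replies.
print(preference_loss(2.0, 0.0))  # small loss: preferred reply scored higher
print(preference_loss(0.0, 2.0))  # larger loss: preferred reply scored lower
```

The loss shrinks as the reply that people preferred receives a higher score than the rejected one, so minimizing it pushes the reward signal toward human judgments.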

Capabilities

With its broad training, some of Claude 100K’s dialogue abilities include:

- Discussing topics from history to science, entertainment to current events knowledgeably

- Engaging in philosophical debates and providing considered opinions

- Using humor and exploring different perspectives without being stubborn

- Looking up additional context when uncertain to enhance helpfulness

- Redirecting inappropriate requests and modifying troublesome behavior

Use Cases

Potential applications of Claude 100K’s conversational abilities include:

- Customer service question answering and account lookups

- Education as a study aid explaining concepts and assisting with homework

- Accessibility help through capabilities like text-to-speech, caption generation, and voice command task completion

- Entertainment through discussing movies, books, music or generating original stories

- Daily life assistance with tasks like scheduling, smart home device control, local business discovery

Future Plans

Anthropic aims to expand Claude’s skills in safe, beneficial ways through:

- Larger models trained on more data to strengthen understanding, reasoning and language abilities

- Enhancing conversation qualities like empathy, flow and personableness

- Continuing to improve logical inference and common sense knowledge

- Scaling AI safety techniques as capabilities grow further

- Additional language models for global users beyond English

FAQs:

What is Claude 100K?

An AI chatbot trained by Anthropic, with a context window of about 100,000 tokens, designed to be a safe, general-purpose conversation partner.

How does Claude 100K work?

Through a Transformer-based neural network trained with self-supervised learning on a massive text corpus to encode contextual knowledge and linguistic patterns.

What can Claude 100K do?

Engage in natural discussions across a very wide range of topics at an adult level through its broad base of common-sense understanding.

Which topics can Claude discuss?

Nearly any domain is within Claude’s capabilities because its diverse training data exposes it to science, history, the arts and more.

How intelligent is Claude?

While not yet at human-level intelligence, Claude displays strong language skills, reasoning abilities and personableness comparable to an exceptionally knowledgeable individual.

What is Claude’s personality like?

It aims for an approachable, considerate and friendly persona focused on respectful dialogue free of bias or stubbornness.

How does Claude stay safe?

Techniques like preference learning, content filtering and transparency protocols help ensure responses stay beneficial and truthful and do not encourage harm.

Who created Claude?

Anthropic, an AI safety startup devoted to building artificial intelligence to be helpful, harmless and considerate through techniques like Constitutional AI.

Conclusion:

Innovations like Claude 100K show how artificial intelligence can act safely and for good when imbued with knowledge and thoughtfulness. Through large-scale self-supervised learning, careful model engineering and built-in protections, Anthropic’s chatbot strives for natural discussion.

Understanding how Claude 100K works shows that conversational AI need not compromise humanity. With care directed toward usefulness rather than progress alone, even superhuman systems might someday uplift society through consideration, harmlessness and honesty. Continued conscientious development will hopefully maximize technology’s potential to empower lives through compassionate connection.