Claude Artifacts: The Mysteries of AI Assistants

At their core, artifacts are any visual, auditory, or linguistic irregularities that arise in the output of AI systems. These can manifest in various ways, such as distortions in generated images, glitches in audio recordings, or contradictory statements across multiple responses.

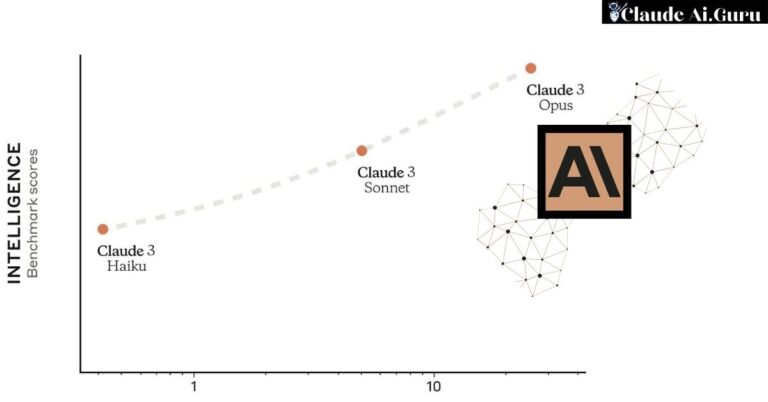

The key to understanding Claude artifacts lies in the nature of the large language models that power Claude. These models are trained on vast amounts of online data, which can contain errors, biases, or inconsistencies. When the models generate new content, these underlying issues can occasionally “leak through,” resulting in the artifacts we observe.

When and How Does Claude Use Artifacts?

Various types of artifacts can appear in Claude's interactions with users. These artifacts, which can manifest as visual, auditory, or language-based anomalies, are an inherent part of the way large language models function and generate outputs.

The Purpose of Artifacts

Artifacts, in the context of AI systems, serve an important purpose in the development and improvement of these advanced technologies. They provide valuable insights into the inner workings of the models, shedding light on areas that need further refinement and enhancement.

By analyzing how often Claude artifacts occur and what form they take, the Anthropic team can gain a deeper understanding of the limitations of current language modeling techniques. This knowledge can then inform ongoing research and development, ultimately leading to more robust and reliable AI assistants.

How Claude Artifacts Arise

Claude artifacts can arise in a variety of ways, primarily due to the complexities involved in training large language models on vast amounts of online data. Some of the key contributors to the emergence of these artifacts include:

- Training Data Inconsistencies: The data used to train language models like Claude can sometimes contain errors, biases, or inconsistencies. When the model generates new content, these underlying issues can surface as artifacts in the output.

- Limitations of Current Techniques: Despite the advancements in language modeling, there are inherent challenges in fully accounting for all possible inputs and outputs. This can result in unexpected or contradictory responses, manifesting as language-based artifacts.

- Complexity of AI Systems: The sheer complexity of the neural networks and algorithms that power AI assistants like Claude can lead to the emergence of visual or auditory artifacts, as the systems attempt to process and generate diverse forms of content.
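
As a rough illustration of the language-based case, where the same question yields contradictory responses, the check below is a minimal sketch and not Anthropic's method. It assumes the responses have already been collected as plain strings. The function names `normalize` and `consistency_report` are hypothetical, and exact-match comparison after normalization is a deliberately crude stand-in for real semantic comparison.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    cleaned = "".join(
        ch.lower() if ch.isalnum() or ch.isspace() else " " for ch in text
    )
    return " ".join(cleaned.split())


def consistency_report(responses: list[str]) -> dict:
    """Summarize how much a set of responses to one question agree.

    Hypothetical helper: two responses count as "the same answer" only if
    they are identical after normalization.
    """
    if not responses:
        raise ValueError("at least one response is required")

    counts = Counter(normalize(r) for r in responses)
    majority_answer, majority_count = counts.most_common(1)[0]
    return {
        "consistent": len(counts) == 1,
        "distinct_answers": len(counts),
        "majority_answer": majority_answer,
        "agreement": majority_count / len(responses),
    }


if __name__ == "__main__":
    # Made-up sample responses to the same question, for illustration only.
    sample = ["Paris.", "paris", "Lyon"]
    print(consistency_report(sample))
```

A low `agreement` value only flags that the responses differ in wording after normalization; deciding whether they truly contradict each other would need a more careful comparison.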

Addressing and Mitigating Claude Artifacts

Anthropic is deeply committed to addressing and mitigating the occurrence of Claude artifacts. Through continuous research and development, the team is constantly exploring ways to enhance the training, filtering, and output generation processes to deliver a more consistent and reliable user experience.

These efforts include improved data curation, refined model architectures, and advanced post-processing algorithms. By addressing the root causes of these artifacts, Anthropic aims to minimize their presence and provide users with a more seamless and trustworthy interaction with Claude.

User Feedback: A Crucial Component

Engaging with users and incorporating their feedback is a vital aspect of Anthropic’s efforts to improve Claude and address the challenges posed by artifacts. By actively soliciting user input and reports on any issues encountered, the team can gain valuable insights to drive further enhancements and refinements.

This collaborative approach allows Anthropic to continuously enhance the capabilities of Claude, ensuring that the AI assistant becomes an increasingly reliable and transparent partner in the digital realm.

Conclusion

As the field of artificial intelligence continues to evolve, the presence of Claude artifacts serves as a testament to the ongoing challenges and opportunities that lie ahead. By understanding the purpose, causes, and mitigation strategies surrounding these anomalies, we can collectively work towards a future where AI assistants can deliver consistently reliable and enriching experiences.